In the ever-evolving landscape of cybersecurity, the battle between defenders and attackers is relentless. As security measures become more sophisticated, so do the methods malicious actors use to bypass them. One such challenge for website owners is the rise of automated Funcaptcha solving. Funcaptcha is a type of CAPTCHA that aims to distinguish humans from bots through interactive puzzles, but, as with any security measure, it has faced its share of vulnerabilities. In this blog post, we’ll explore the latest advances in solving Funcaptcha and what they mean for the effectiveness of this once-formidable defense.

Understanding Funcaptcha: Funcaptcha is a type of CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) that presents users with interactive puzzles, such as dragging and dropping objects, identifying images, or solving mini-games. The puzzles are designed to be easy for people but hard for automated programs, so a correct answer serves as evidence that a human is present. By filtering out bots this way, websites that deploy Funcaptcha aim to prevent various forms of automated abuse, such as spamming, credential stuffing, and DDoS attacks.

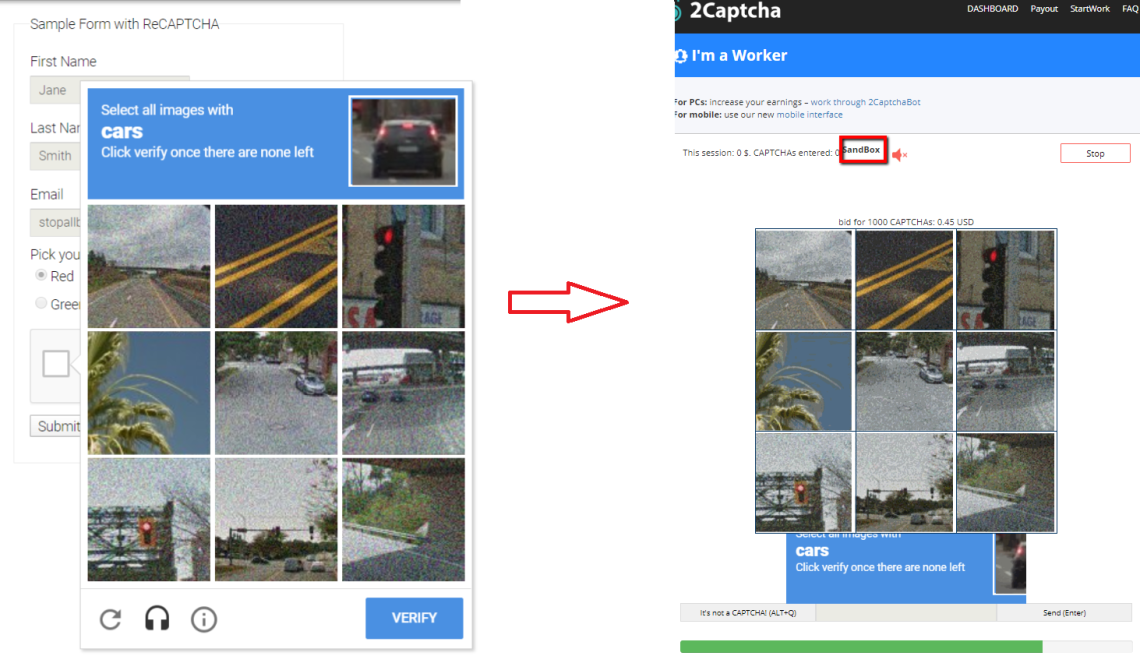

Despite its purpose of thwarting bots, Funcaptcha has not been immune to exploitation. Over time, researchers and attackers have developed methods to bypass it, exposing weaknesses in its design and implementation. Let’s look at some of the approaches to solving Funcaptcha that have emerged recently.

Breakthroughs in Funcaptcha Solving Methods:

- Machine Learning Algorithms: Researchers have made significant strides in training supervised models to solve Funcaptcha puzzles with high accuracy. Trained on large datasets of Funcaptcha challenges paired with their correct answers, these models learn to map each puzzle to its solution, answering challenges that were meant to require a human.

- Reinforcement Learning: Another promising approach uses reinforcement learning, in which an agent learns by trial and error, receiving feedback (a reward signal) on each action it takes. Researchers have applied these techniques to build agents that solve Funcaptcha puzzles through repeated attempts, improving their performance over time.

- Generative Adversarial Networks (GANs): GANs, a type of model consisting of two neural networks trained against each other (a generator and a discriminator), have also shown promise against Funcaptcha. The generator learns to create synthetic Funcaptcha-style puzzles realistic enough to fool the discriminator, which tries to tell real puzzles from synthetic ones. Rather than solving puzzles directly, these synthetic puzzles are typically used to enlarge the training data for a separate solver model, reducing how many real challenges must be collected and labeled.

- Hybrid Approaches: Some of the most effective Funcaptcha solvers combine several of these techniques, for example pairing a supervised model with GAN-generated training data, or with reinforcement learning for the more interactive puzzles. By playing to the strengths of each approach, these hybrid systems can outperform any single technique in both accuracy and efficiency.

Implications and Future Directions: The emergence of advanced solving methods for Funcaptcha has significant implications for website owners and security professionals. As attackers gain access to increasingly sophisticated tools for bypassing Funcaptcha, the effectiveness of this once-reliable defense comes into question. Website owners should reassess their security strategies and avoid relying on a CAPTCHA alone, adding supplementary measures such as rate limiting, behavioral analysis, and monitoring for anomalous traffic to defend against automated threats.

Looking ahead, the arms race between defenders and attackers shows no signs of abating. As Funcaptcha continues to evolve, so will the methods used to solve it, and researchers and security practitioners must remain vigilant, continuously innovating and adapting to stay one step ahead of malicious actors.